Haskell for JavaScript and Python developers

If you come from JavaScript or Python, you may have a very specific idea of what programming is. In this tutorial, I will try to show you another way to think about programming in general. Contrary to JS, Haskell is a declarative language with a strict and static type system. You may think that it makes Haskell really rigid, complex, and verbose, but I hope at the end of this tutorial you change you mind maybe a little bit and understand types are not your enemies.

If you already know Haskell but still want an exercise, go directly to the 3rd chapter

Before we start: Lambda Calculus

This chapter is very theorical, but important nonetheless. It shows the base of Haskell and it will help you all the time to understand complex functions. Haskell is a functional and declarative language. These 2 aspects of the language are very important. It is deeply inspired from lambda calculus which we will show in a bit.

Declarative languages, and lambda calculus

Declarative means that a program is not seen as a list of things to do, but as a description of how the thing we want to compute is. This means when you say a = someExpression then you exactly say that "a is someExpression". This is not "put the value of someExpression inside a memory labeled a", this last sentence is how you understand = sign in imperative languages.

This means there is some kind of inherent "immutability". You never put values in memory places, you always say what are things, and this will stay the same forever. This does not mean it will always return the same result, only that for the same input it will give the same output. This concept of "only changing depending on the input" is why declarative languages are intresting. They are very predictable.

Functional language

Functional means we will treat functions as normal values, that we can create, modify, take as parameters, or return as value. This concept is really interesting as it gives us built-in command, strategy, or even builder patterns (for those of you that like object oriented design patterns hehe), but also with a bit of syntactic sugar, a very powerful way of writing complex programs.

The most famous example of a function thaken as parameters is the function map. map takes 2 parameters:

- a function that transform objects

- a list of those objects

and it returns a list containing objects transformed with the given function.

Lambda calculus

One of the first (if not the first) declarative programming language is the lambda calculus. In lambda calculus, there are only 3 constructs:

- Variables (names), like

a,b,x,f,jeanclaude... - Lambda abstractions, like

. You can interpret these as "function that takes an input called

xand returns something that has the formE(some expression with maybexused in it). Lambda abstractions gave their name to "anonymous functions" in modern languages. When you define a "lambda" it comes from here. - Applications, like

. You can interpret them as "function call". In this example, we called function

fwith expressionEas first parameter.

A bit of semantics

The semantic of a language is a set of rules to compute a program. For example, in a language like JavaScript, there is a precise definition of variable declaration, or even how you must compute a whole program.

;

This line will roughly do this:

- allocate a place in memory

- give it the name

xso that we can access this place again - put the numerical value

0in it

And if there were multiple lines, we would execute the actions of each line in order (providing we don't do branching). So there is a concept of a "reading head" that goes forward after each action. The semantic of JavasScript is really complex, there are whole papers and thesis about it, but it has one like all other languages and knowing it is important to understand why things work.

Lambda calculus (and also Haskell) also have a semantic. There a 2 rules (yes only 3 constructs and 2 rules and you have a turing complete language !), but we will focus on ony one rule, as the other rule is here to de-ambiguate things when multiple expressions have the same name.

This rule is called (beta reduction), but don't fear its name it is a very simple "search and replace" rule. For example, to compute this expression using the

rule in this lambda calculus expression (extended with arithmetic operations):

we would take the expression , and replace every occurrence of

with

, resulting in the new expression

. Some expressions need multiple

steps to be computed. For example this one:

For convenience we will reduce it from the inner-most application to the outer-most. The first will take the expression

and replace every

with

, leaving us with the expression:

Then, the second reduction we will perform would be reducing , resulting in

. The full expression once this reduction is done is this:

Then do the same with the expression :

And finaly reduce the last application:

And we have , we successfuly computed "double twice the value 1". Everything is computable using this technique, you just need a lot of

. Knowing this is not that useful when you program in real world, but it is helpful when you need to compute yourself part of a program to understand how it behaves. The same applies to imperative languages, sometimes you just have to execute your lines in your head to understand why there is a bug for example.

Exercise

Try reducing these expressions:

In , the value

is bound to the name

but

never appears in the lambda's expression so it is never used.

Yes, never reduces to a value, it always loops infinitely !

You may think would loop infinitely too because it contains

, but since

disappears we don't need to reduce it, and never loop.

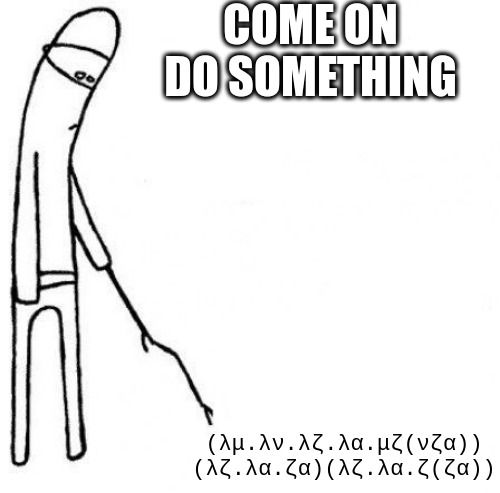

If you wondered, the expression in this chapter's illustration is equivalent to , and it reduces to:

Which is equivalent to . Basicaly, it applies the function

(Zeta) 3 times on a base value

(Alpha). If you want to know more about this, search for Church encoding.

This is all you have to know about lambda calculus. Again, this may seem like useless trivia, but it is the heart of Haskell semantic, and we will do reductions sometimes.

Haskell basics

Install via GHCUp

In order to play with Haskell, you first need to install it on your system. Follow this guide to install it. For windows users, I recommend installing it on a Linux VM using WSL2, because my examples are crafted for linux users, but do as you want as long as you can run ghci.

Try ghci

In the terminal, launch the ghci command. It is a REPL, you can write any Haskell expression and it will evaluate it and print the result. Try it out:

ghci> 1+1

2

ghci>

You can change the prompt to anything you want. For the rest of this tutorial, I'll change the prompt to λ>:

ghci> :set prompt "λ> "

λ>

Expressions

In Haskell, everything is an expression, even the most complex program is just one big expression. Try to evaluate some:

λ> 10 < 5

False

λ> "hello" ++ " world"

"hello world"

λ> ['h', 'e', 'l', 'l', 'o']

"hello"

λ> False || True

True

λ> "false" && True

<interactive>:2:1: error: [GHC-83865]

• Couldn't match type ‘[Char]’ with ‘Bool’

Expected: Bool

Actual: String

• In the first argument of ‘(||)’, namely ‘"false"’

In the expression: "false" || True

In an equation for ‘it’: it = "false" || True

My my, what happened ? Why did ghci insult us ? Let's look at the error. Could not match type [Char] with Bool. This means Haskell expected a value of type Bool (a boolean) but got a value of type [Char]. It even tells us where: In the first argument of (||), namely "false". This means the value "false" needed to be of type Bool, but its type is (obviously) [Char] (or String, since they are synonyms).

Everything has a type

As we saw earlier, Haskell expressions have types. And values are never implicitly converted. If your value is of type String, it will remain a String, unless you transform it with a function.

To see the type of an expression, you can use the :t ghci command:

λ> :t "hello"

"hello" :: String

λ> :t 2*2 > 0

2*2 > 0 :: Bool

λ> :t ["i", "am", "lily"]

["i", "am", "lily"] :: [String] -- list of strings

λ> :t 'i'

'i' :: Char

λ> :t 2*2

2*2 :: Num a => a

Wait wait wait... What is this again ? The type of 2*2 seems to be overly complicated for a simple multiplication. Why is it not Int or Number ? It has to do with polymorphism. Don't worry about that for now, just know when a type start with a lowercase letter, it means it's like a generic.

Why am I showing this now and not in the polymorphism section ? Because if you are curious, you will try :t on things I did not show. And it is good ! Go for it !

Use the :t command a lot, in languages with well designed type systems, knowing the type of things is often all you need.

Functions

As said earlier, functions in Haskell are like other objects. They are the base of programs. Programs are just a big composition of functions.

Let's play with some of them:

λ> length "wiwiwi" -- gives the length of a list

6

λ> length [1, 2, 3]

3

λ> not True

False

λ> negate 10

-10

λ> head "king"

'k'

λ> tail "cat"

"at"

If you come from other languages, you may be surprised, because we don't "call" functions with the usual function(parameter) syntax. Well, this is inherited from lambda calculus, applying a function is just writing the function and the parameter next to each other: (function parameter).

They have types too. The "function" type is sometimes also called "arrow" type, and is noted TypeOfInput -> TypeOfOutput.

λ> :t not

λ> :t lines

Of course, applying a function to a value gives another value, which type is its output type:

λ> :t not False

not False :: Bool

λ> :t lines "one\ntwo\nthree"

lines "one\ntwo\nthree" :: [String]

λ> lines "one\ntwo\nthree"

["one", "two", "three"]

Operators are functions too ! Look:

λ> :t (&&) -- to treat operators as normal objects, wrap them in parenthesis

(&&) :: Bool -> Bool -> Bool

λ> (&&) True False -- use && as a function instead of an infix operator

False

Hmm, there are two arrows. This is weird, usualy functions that take two inputs, in other languages, are typed like (TypeA, TypeB) -> TypeOutput... Well, actualy this has to do with something called Currying, and we will talk about it later. But for now, just assume we just define multiple-parameters functions like so: Type1 -> Type2 -> Type3 -> ... -> TypeOutput. So yeah, the AND boolean operator takes two booleans and returns a boolean.

To call a multi-param function, simply put its parameters one after the other:

λ> take 5 "hello world"

"hello"

Polymorphism

You may have seen that I only showed Bool, String, Char... They are concrete types. But in Haskell, you can define objects that can have multiple types at once !

Parametric polymorphism

Parametric polymorphism has many names. In languages like C# and Java, they are called generics. In C++ they are called template types (because C++ does nothing like others do). The simplest example is the id function:

λ> :t id

λ> id "something"

"something"

λ> id 10

10

λ> id True

True

id function takes an object and returns it unchanged. This is why its type is a -> a: It takes an object of any type, and returns and object of the same type. In fact, there are as many id functions as there are types, and the compiler just choses the right one depending on its input ! One object, multiple forms (hence the name polymorphism).

We say that a is a type variable.

Polymorphic types

Types can be polymorphic too, and we already used a polymorphic type before: the list. This type is a bit special, because it has no name but a specific syntax: [a]. Yes, you have it, [a] is "a list of elements of type a". And a is generic, it can be anything.

A [Char] and a [Bool] are different types, but there are functions that can work on any list. Like the function take, or the operator (++):

λ> :t take

λ> :t (++)

take needs an integer (Int), and a list of any type ([a]) and returns the firsts n elements of the list, so also a [a]. (++) takes two lists and chain them, so its type is pretty self-explanatory.

List is not the only polymorphic type, as you may have thought. One other very popular polymorphic type is the tuple. Tuples are collections of values of different types. For example, a point in a 2D space can be represented as a tuple of 2 Float: (Float, Float). But you can also want to associate person names with their wealth: (String, Float).

λ> (-3, 12) -- coordinate of some 2d point

(-3, 12)

λ> ("Nicolas", 10000000) -- wealth of Nicolas

("Nicolas", 10000000)

Functions on tuples are interesting:

λ> :t fst

Here you can see that fst has 2 type variables: a and b, but it only returns an object ot type a. Can you guess, using only the type of this function, what it does ?

Since we know nothing about the type a, we cannot produce one from nothing. This means we need to take it from somewhere, and the only object of type a that the function gets is the first component of the tuple.

We can deduce that fst returns the first component of the given tuple.

Let's try guessing what other functions do only by their type:

func1takes two lists, and pairs their elements one by one, creating a list of pairs. Its name iszip.func2takes a list of pairs, and separate the pairs, returning two lists, one ofas and one ofbs. Its name isunzip.func3takes a list of lists, and chain all the lists together one after the other. Its name isconcat. You may not have the same type if you check it on ghci using:t, but it does the same (it's just more powerful in reality).func4takes a tuple and swaps its elements. It is the functionswap.

As you can see, types encode a lot of information. One can manage to know what a function will do only by looking at its type ! Well, it's not always true, for example && and || are both of type Bool -> Bool -> Bool, but you get the idea. It is so powerful that if you search for a function in a package or the standard library you can search by type using Hoogle search engine. Try it, search for, let's say, a function of type a -> [a]. You should get two useful functions: one that creates an infinite list of the same object, and one that creates a list with a unique element.

Ad hoc polymorphism

Remember the type of 2*2 ? It was a bit complicated: Num a => a. Haskell type system has something called type classes. If you know some object oriented languages, you can see them as "interfaces". They are a bit more powerful than interfaces but for now it is a good analogy.

What ghci tells us here, is that 2*2 can be any type that can act like a number. The Num is the name of the type class, and it is the "type class of all numbers".

To know more about a specific type class, use the :i command:

λ> :i Num

type Num :: * -> Constraint

(*) :: a -> a -> a

It tells us that Num defines multiple objects:

- operators:

(*)(+)and(-) - functions:

negate,abs,signum,fromInteger

:i even showed you some types that are Num (Double, Int, ...) and they look like number types to me so it makes sense ><

There are many more type classes, here is a non-exhaustive list:

| Name | Objects | Description |

|---|---|---|

Eq | (==) (/=) | Types that can be tested for equality |

Ord | (>) (<) (>=) (<=) compare | Types that can be ordered |

Enum | succ pred | Types that have a concept of successor and predecessor |

Bounded | minBound maxBound | Types that have lower and upper limits |

Show | show | Types that can be printed as text |

Read | read | Types that can be parsed from text |

The problem of polymorphism

Sometimes, an expression is not explicit enough, and Haskell cannot deduce its type. Let's say you wanted to parse an integer value and print it right after. You may write something like:

λ> show (read "123")

"*** Exception: Prelude.read: no parse

But it gives you a runtime error. This is because the read function has type Read a => String -> a, meaning it can output any type that can be parsed, and the function show has type Show a => a -> String, meaning it can take as input any type that can be printed. Since there are multiple type that can do both, ghci cannot know which type to chose, and it chose wrong. We wanted an Int but we got a () (more on this type later).

λ> show (read "()")

"()"

To avoid errors like this, you can force the type of an expression by annotating it using the :: Type syntax. Here:

λ> show (read "123" :: Int)

"123"

Now it works, because we forced the type of read "123" to be Int, so Haskell knew it had to select the read :: String -> Int version, and not another version of read.

Bindings

When you want to re-use an expression (most of the time it's because this expression is a function), you can give it a name. It won't evaluate it, but it will allow you to use it multiple times without re-writing it every time.

λ> x = 2

λ> y = x+x

λ> z = y*y

λ> z

16

Be careful, bindings are not variables. We call them often variables, but they are only names given to expressions. Because of that, you can have weird behaviors in Haskell with code that seems it should work in other languages:

λ> a = a + 1

λ> a

-- and your ghci hangs (do CTRL+C to stop the last evaluation)

Why did it do this ? Well, because I gave the name a to the expression a + 1, and tried to evaluate a. Remember the exercises on how to reduce lambda calculus expressions ? Well Haskell works exactly like this. Why don't you try to reduce a ?

Haskell entered an infinite loop, it will never reduce to a value !

Bindings as expressions

When you write name = expression in a file, it creates a global binding. If you only want this binding to appear in an expression, you can use let expressions. Here are some examples:

λ> let x = 2 in x+x

4

λ> x

<interactive>:2:1: error: [GHC-88464] Variable not in scope: x

x only exists in the first expression !

Modules

Programs and libraries in Haskell are written in files ending in .hs. These files are called Haskell modules. You can define multiple bindings in a module, and loading it will give you access to these bindings. Try writing some bindings in a file let's say Bindings.hs:

one = 1

two = one + 1

myname = "lily"

And load the module in ghci using command :l Bindings.hs. Now play a bit with this module:

λ> one

1

λ> myname

"lily"

You can filter what you want to expose from the module, but for now we will just expose everything.

Importing a module

Importing a module is done using the keyword import and naming the module to import.

-- import functions relative to Char type

You can also import modules in ghci:

λ>

λ> :t ord

λ> ord '0'

48

Functions are expressions too

For now we only saw how to use functions. But we also need to know how to create functons. The syntax is very simple. \parameter1 parameter2 ... -> expression.

λ> inc = \x -> x+1

λ> inc 10

11

λ> hyp = \x y -> sqrt (x*x + y*y)

λ> hyp 3 4

5.0

It looks almost the same as lambda abstractions in lambda calculus, the \ and -> replace the λ and . . Functions are exactly like values. The only problem in ghci is that we cannot show them. So if you want to create functions, always give them a name.

Functions are normal expressions, so you can also use them in let expressions:

λ> hyp = \x y -> let square = \x -> x*x in sqrt (square x + square y)

λ> hyp 6 8

10.0

Ok, here it is useless, but we will see why it is useful later with recursion.

Function syntactic sugar

It can be tiring to always write functions like this. So creating functions and giving them a name, we have syntactic sugar. It is "a syntax that is translated to another syntax" just to help writing things. No new semantic, just code rewrite at compilation.

λ> inc x = x+1

λ> inc 10

11

λ> hyp x y = let square x = x*x in sqrt (square x + square y)

λ> hyp 3 4

5.0

Conditions, and pattern matching

Until now, we never made choices. Always only one path to take, one expression to reduce to. But some functions need multiple choices. For example, the Fibonacci sequence is defined differently given its rank.

The sequence has the same expression for all , but for

or

, the expression changes.

Conditions

Conditions are branches based on boolean expression this means you need a boolean expression (i.e. a comparison) and at runtime, it will chose a path based on the value (True or False) of this expression.

If then else

λ> if True then "yes" else "no"

"yes"

λ> if not True then "yes" else "no"

"no"

λ> syracuse n = if even n then div n 2 else 3*n + 1

λ> syracuse 3

10

λ> syracuse 10

5

if then else is an expression, so it has a type. Let's define a function that just "applies" an if then else and see which types does Haskell compute:

λ> ite condition yes no = if condition then yes else no

λ> :t ite

This type is really interesting. First, we see that condition must indeed be a Bool expression. But then we see a type variable. So the then and else branches can take expressions of any type BUT there is a catch. We see that there is only one type variable, so both branches need to contain expressions of the same type. That makes sense, if it is an expression it must have only one type at runtime (even if this type can vary depending on the context at compile type). What would happen if, let's say you could define this function:

λ> intorstring c = if c then 10 else "yo"

and then do something like:

λ> (intorstring didIDoTheDishes) + 1

At runtime, the program would not work half of the time, because if you did not do the dishes before running the program, it would output a String, and Strings are not numbers. Now you start to understand why stricly typed programs are easier to work with once you understand types. No more weird bugs like in JavaScript or Python ><

Guards

Sometimes, a function can have a lot of different cases. We could write a tree of if then else (if then else (if then else (...))), but it would be really difficult to read really quicly. So Haskell has syntactic sugar (yes, again) to help us. Let us say you want to give a description of an earthquake based on its score in the Richter scale. Using if then else, it would be written as:

richterClass n = if n < 2 then "micro" else if n < 3 then "minor" else if n < 4 then "slight"

else if n < 5 then "light" else if n < 6 then "moderate" else if n < 7 then "strong" else if n < 8

then "major" else if n < 9 then "great" else "extreme"

It is absolutely unreadable ! Now let's see how it would work using guards:

richterClass n

| n < 2 = "micro"

| n < 3 = "minor"

| n < 4 = "slight"

| n < 5 = "light"

| n < 6 = "moderate"

| n < 7 = "strong"

| n < 8 = "great"

| n < 9 = "major"

| otherwise = "extreme"

This is much more readable, and can also be extended way more easily. This is syntactic sugar because if just translates to a cascade of if then else like the one we saw earlier so that it tries the first branch, and try the next ones in order until one succeeds. The last condition is otherwise. which translates to just True. You can even see this using ghci:

λ> otherwise

True

So the last branch translates to if True then "extreme" else error "non exhaustive patterns ...". You can see that it does translate to this by creating a function without a "catch all" condition.

bad n

| n < 10 = "less than ten"

Now let's call bad:

λ> bad 9

"less than ten"

λ> bad 42

*** Exception: <interactive>:(18,1)-(19,16): Non-exhaustive patterns in function bad

bad is called a partial function. We try to never create partial functions because they can crash. This is why I will not talk in details about functions like error. We will see how to use the type system to handle errors later.

Pattern matching

We saw that conditions work on any boolean expression. They are great, but the compiler cannot reason about them. For example, let's say we want to take the head of a list OR zero if it is empty.

λ> headOrZero l = if null l then 0 else head l

This function works perfectly fine, because of course if l is not null then head l will never crash. BUT, the condition you put in your if can be absolutely anything, and the compiler will never complain if the expression has the right type. Let's say you created a function nul :: [a] -> Bool but it checks another condition. If you do a typo and write nul instead of null in the definition of headOrZero, the program will compile perfectly but it may crash now because we may get head l when l is empty.

Of course it would absolutely never happen, right ? You are a good programmer, you know how to write code, and you even have linters to help you... right ? Wrong. This happens all the time. We are human, we make mistakes, even in things we do all days. I know how to walk and avoid stuff in my vision, but sometimes my toe hits the chair. And I cry. And I hate myself... Anyway, don't rely on conditions only. Sometimes we can do better. And this better is pattern matching.

In Haskell, some types have a "shape". For example, tuples have the shape (firstVal, secondVal), lists can be either [] (an empty list), or composed of a head attached to a tail: head:tail. And you can tell Haskell what to do depending on the shape or a value.

case of

The first kind of pattern matching we will see is the case of expression.

case expr of

pattern1 -> expression1

pattern2 -> expression2

-- ...

For example, if we want to implement the null function, we can write:

null l = case l of

[] -> True

h:t -> False

We match against the shape []. If the list has this shape, it is empty, so null l is True. If it has the h:t shape (a head followed by a tail), well, it is not empty since it has at least one element (the head).

Pattern matching can also be used to destruct values (access their parts). For example, the partial head function can be written:

head l = case l of h:t -> h

This function is partial because it has no branch handling the [] shape. And Haskell can warn us. If it did not warn you, you can enable the warning using this ghci command: :set -fwarn-incomplete-patterns

λ :set -fwarn-incomplete-patterns

λ head l = case l of h:t -> h

<interactive>:34:10: warning: [GHC-62161] [-Wincomplete-patterns]

Pattern match(es) are non-exhaustive

In a case alternative: Patterns of type ‘[a]’ not matched: []

Another win for Haskell's type system.

We can also use pattern matching on tuples, let's implement the functions fst and snd which respectively get the first and second component of a tuple:

fst t = case t of (f, s) -> f

snd t = case t of (f, s) -> s

Now try to implement the same functions but for tuples of 3 elements:

fst3 t = case t of (a, b, c) -> a

snd3 t = case t of (a, b, c) -> b

thd3 t = case t of (a, b, c) -> c

You see that you don't have any warning for these functions. This is because the pattern used catches all possible shapes of a tuple. The case of is exhaustive, our function is total.

Variable patterns

When you see a name in a pattern, the name itself is a pattern. This pattern is like saying "whatever shape is at this place, I don't care I just want to bind the value to a name". So saying:

case l of

h:t -> h

Means: "what shape has h is irrelevant, I just need it to be at the head of l, and I name it h because I need to use it later.". So you can have a pattern with only a name, and it will catch everything. See this new version of null:

null l = case l of

[] -> True

blerp -> False

We don't care about the shape of the list if it is not [], so we can just put a variable there.

Number patterns

Numbers also have shapes, 0, 1, 2048... so you can pattern match against numbers too:

iszero n = case n of

0 -> True

n -> False

You see that ? It's almost like the Fibonacci sequence, we define our expression when n is something. Let's translate the mathematical definition in Haskell:

f n = case n of

0 -> 0

1 -> 1

n -> f (n-1) + f (n-2)

Multiple definition

When a function contains a single case of expression, it can be easier to write it using multile definitions. You simply define the function multiple times with a different pattern each time. Yes, in fact, function parameters are patterns !

f 0 = 0

f 1 = 1

f n = f (n-1) + f (n-2)

Try it, write these lines in a file and load it in ghci using :l TheFile.hs. You can even write them in a let expression:

g n = let f 0 = 0; f 1 = 1; f n = f(n-1)+f(n-2) in f (2*n)

We created a function that computes Fibonacci(n*2) by defining the fibonacci function inside g's expression.

Indentation rules

Before we continue, we need to talk a bit about expression delimitation. You can do whatever you want with indentations as long as you follow 2 rules:

- An expression MUST be at least one column further than the start of its definition block (blocks of the form

pattern = expressionorpattern -> expression. - Items that are part of the same item sequence MUST start at the same column. Item sequences are things like

let def1 = ... def2 = ... indefinitions,case ... of pat1 -> ... pat2 -> ..., items defined inwhereclauses, and a last thing: instructions indoblocks. (But we will see this last one much later).

-- this is totaly UNREADABLE but valid haskell

f n =

let

-- definitions of x and y start at same colum

x

-- every other line of the definition

-- of x are more on the right

=

n+1

y = n-1

in -- keywords are part of an expression, they follow the expression rule

-- the 'in' and 'case' keywords here belong to the top definition

-- (the definition of function 'f')

case

-- n is only an expression it goes

-- wherever it pleases

n

of

-- 0 and n start on the same column

-- 1 and 2 (the expressions associated with each definition)

-- are futher right

0 ->

1

n -> 2

-- this is not valid haskell

fibonacci n = case n of

0 ->

0 -- the expression is not at least 1 column futher on the right

1 -> 1

-- this last definition is not on the same column as the 0 and 1 defs

n ->

-- the let and in are not indented enough since they belong to the

-- expression associated with the pattern 'n ->'

let

f1 = fibonacci (n-1)

f2 = fibonacci (n-1)

in

f1

+

f2

-- everything else is totaly fine, just 3 errors

-- only 2 if you count the fact that correcting the second error will correct the third

For the rest of this tutorial I will try to follow this syntactic rule:

- If it has only one definition, and the definition fits on one line, don't indent

- If it needs multiple lines, or it does not fit on one line, indent only what is needed by 2 spaces

For example:

function exp = case exp of

pat1 -> exp1

pat2 -> case exp2 of

pat21 -> exp21

pat22 ->

a

kind

of

long

expression

pat1 -> exp1

function2 param = let

pat1 = case exp1 of

pat11 -> exp11

pat12 -> exp12

pat2 = exp2

pat3 = exp3

in someExpr

RPN calculator level 1

So what now, we played a bit with core concepts of Haskell, it was fun (for me at least), but we didn't do actualy useful programs. Only toy examples. A full program will need more than that ! So let's do it, we will build something useful ! A Reverse Polish Notation calculator !

Why this ? Because it is very comprehensive. It involves parsing, error handling (yeah I know if you come from JS it may be a weird concept 😇), inputs/outputs, software architecture ...

Once done, the software will look like this:

> 10 20 +

30

> dup

30:30

> 2 /

15:30

> +

45

> 0 /

Error: division by zero at symbol '/'

> drop

[]

> drop

Error: empty stack at symbol 'drop'

So let's begin by opening the file RPN.hs

First things first, a model of our world

Before doing anything with code, we need to think about what is the think we want to build. Reverse Polish Notation is a way to write arithmetic expressions without the need of parenthesis. It does so by having an unambiguous syntax where every symbol is read from left to right, and an action is done for each symbol. Numbers are put on a stack, and other symbols do things with the stack. Here is an example:

1 2 + dup *

will be evaluated like this:

- push 1 on the stack

- push 2 on the stack

- take out the 2 topmost elements (1 and 2), add them (3), and push the result (3) on the stack

- take out the topmost element (3) and push it twice (duplicate the stack top)

- take out the 2 topmost elements (3 and 3), multiply them (9), and push the result on the stack

In the end, the stack contains the number 9, we computed the expression .

We see that the program will have:

- a stack

- a RPN expression

- an expression is a list of RPN symbols

- a RPN symbol is either a number, or an arithmetic operation, or

dup(for now)

- a function to modify the stack based on a given expression

The stack is the simplest type. Since we only deal with numbers, we can just define a stack as a list of numbers. Why a list ? Because it has the same shape as a stack:

- to push, you add an element at the head of the list :

element : stack. - to pop, you can match against the

top : stackpattern.

So we can just create a type alias:

type RPNStack = [Double]

This is exactly how String is defined by the way. You can check with :i String:

λ> :i String

type String :: *

type String = [Char]

-- Defined in ‘GHC.Base’

To represent our RPN symbol, we need to define our own type. In Haskell, most types are defined using the data syntax. It is used like this:

data Type

= Constructor1 AttributeType11 AttributeType12 AttributeType13

| Constructor2 AttributeType21 AttributeType22

| Constructor3

-- ...

A type consist of multiple variant, also called "constructors", and each variant can have 0 or more attributes. For example, our RPN symbol type could be defined as:

data RPNSymbol

= Number Double

| Add

| Sub

| Mul

| Div

| Dup

| Drop

A RPNSymbol can be a Number, and a Number has 1 attribute of type Double, because it must contain which number to push. A RPNSymbol can also be an operation (for now we only defined 5 of them).

Printing

Let's try to play with this type:

λ> :l RPN.hs

λ> :t Add

Add :: RPNSymbol

λ> Add

<interactive>:56:1: error: [GHC-39999]

• No

• In a stmt of an interactive GHCi command: print it

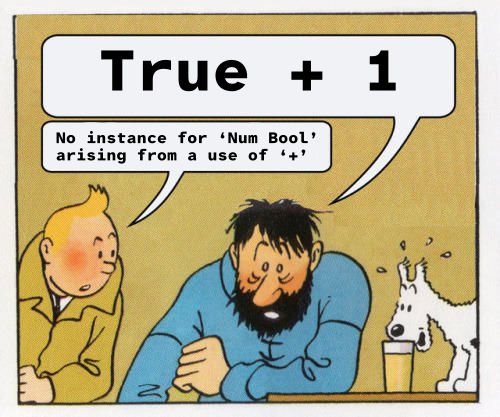

Oh, ghci did not like it because RPNSymbol cannot be printed, it does not implement the Show typeclass. And Haskell tells us by saying • No instance for ‘Show RPNSymbol’ arising from a use of ‘print’, which means "the print function (used by ghci to print the result of an expression) needs to implement Show, but RPNSymbol does not".

Let's implement this function then ! Implementing a typeclass is just a bit of syntax:

show symbol = ...

We start with instance Class Type where, and then define the needed functions. In the case of Show, the only function to define is show. Don't forget to indent your functions !

So how would we implement it ? Pattern matching would be perfect here:

show (Number n) = show n

show Add = "+"

show Sub = "-"

show Mul = "*"

show Div = "/"

show Dup = "dup"

show Drop = "drop"

You can see here that constructors of our type can be used as patterns, this is why pattern matching is so useful. Did you see also that we called show on the attribute of Number ? It's ok to do this because Double implement show, so the show on the right is the Double version and not the RPNSymbol version.

Now try again:

λ> :l RPN.hs

λ> Add

+

λ> Mul

*

λ> Number 5

5.0

λ> [Number 1, Dup, Add]

[1.0,dup,+]

Did you see how constructors with attributes are used ? They are like functions, you simply put attributes next to the constructor. And in fact, they really are functions. Check the type of Number:

λ> :t Number

Number :: Double -> RPNSymbol

How would you implement show using case of instead of multiple definitions ?

show symbol = case symbol of

Number n -> show n

Add -> "+"

Sub -> "-"

Mul -> "*"

Div -> "/"

Dup -> "dup"

Drop -> "drop"

It is the same with lists, the (:) operator is a constructor for the list type, so it is usable as a pattern, AND as a function that creates a list with a head and a tail:

λ> :t (:)

(:) :: a -> [a] -> [a]

λ> [1,2,3] == 1:2:3:[]

True

λ> case [1,2,3,4] of (x:y:rest) -> (x, y, rest)

(1,2,[3,4])

Try implementing the functions swap, which swaps the two first elements of a list if the list has at least two elements, and does not modify the list eitherway.

swap l = case l of

(x:y:xs) -> y:x:xs

l -> l

Parsing

Now that we can see a RPNSymbol, it would be nice to read it. We won't implement the Read typeclass, because it is a bit difficult. We will simply implement a readRPNSymbol function:

Your turn! Try to implement it yourself. It should do this:

- If the string is an operation symbol, the result is the corresponding operaton

- If not, then try reading the string as a number

- Some output examples:

λ> readRPNSymbol "+"

+ -- remember, this is the textual representation of Add constructor

λ> readRPNSymbol "12"

12.0 -- this is the textual representation of Number constructor

String literals ("blabla") are also valid String patterns. At the moment, we don't care about total functions. Your function can be partial. We will make it total soon.

readRPNSymbol string = case string of

"+" -> Add

"-" -> Sub

"*" -> Mul

"/" -> Div

"dup" -> Dup

"drop" -> Drop

s -> Number (read s)

Evaluating a symbol

For now we can only show and parse our symbols. But wouldn't it be nice to evaluate them ? Make them actualy do something to the RPN stack ? Let's define a function:

Same as before, we will enumerate all possible patterns for the type RPNSymbol :

evalRPNSymbol symbol stack = case symbol of

-- ...

For a number, we simply put it on the stack (try implementing the Number case):

evalRPNSymbol symbol stack = case symbol of

Number n -> n : stack -- numbers are just pushed on the stack

For the Add case, we need to get the first and second elements of the stack. We can do it with pattern matching:

evalRPNSymbol symbol stack = case symbol of

Number n -> n : stack -- numbers are just pushed on the stack

Add -> case stack of (y:x:stack) -> (x + y) : stack

Now try implementing the other cases:

evalRPNSymbol symbol stack = case symbol of

Number n -> n : stack -- numbers are just pushed on the stack

Add -> case stack of (y:x:stack) -> (x + y) : stack

Sub -> case stack of (y:x:stack) -> (x - y) : stack

Mul -> case stack of (y:x:stack) -> (x * y) : stack

Div -> case stack of (y:x:stack) -> (x / y) : stack

Dup -> case stack of (x:stack) -> x : x : stack

Drop -> case stack of (x:stack) -> stack

You feel like it is really annoying and verbose ? Don't worry, I do two. But for now, this is all we can do with what we know. It will be better soon. You can still try the function now:

λ> :l RPN.hs

λ> evalRPNSymbol (readRPNSymbol "1") []

[1.0]

λ> evalRPNSymbol (readRPNSymbol "+") [1,2]

[3.0]

Loops using recursion

Now that we can process a single RPNSymbol, it would be nice to process a whole RPN expression. So first let's define what is a RPNExpression.

type RPNExpression = [RPNSymbol]

Simple, right ? Now let's read a whole expression. An expression is entered as a String by the user, and is a list of symbols separated by spaces. Ok, so we could first separate our input String into multiple Strings separated by space with the function words, then process each word using readRPNSymbol. This is a nice approach, chain together functions that do each a little bit of the whole process, and you have the whole process. We could implement our own words function, and if you feel like it be my guest, but we are not there yet.

So our whole process resembles this:

readRPNExpression s = toSymbols (words s)

You can write this function in your module. What we need now is the function toSymbols. First of all, let's try to compute its type.

- We know its output must be a

RPNExpression(aka[RPNSymbol]) since its result is the result ofreadRPNExpression. - Its input is the result of

words, so it is a[String](list of strings)

So toSymbols has type [String] -> [RPNSymbol]. It seems obvious that this function reads each String of the input list into its RPNSymbol equivalent. So something like a for each ? No, we don't have these in Haskell. We will need to think different recursive.

A recursive function is a function that calls itself. Since it calls itself, the "self call" (recursive call) will do the same work again, and again, and again... until some exit condition is true and we just stop calling the function recursively. Oh but I just described a loop, didn't I?

To think recursive, we first need to understand how the function behaves with certain simple inputs (e.g. the empty list, a list with one element ...), and try to find a general case (which is often the recursive case), and at least one specific case which does not involve recursion (which we call the base case or terminal case) to stop the recursion once reached. It's like finding the termination condition of a loop.

So, let's think about the empty list first:

toSymbols [] = _what

Write this line and load the module in ghci...

λ :l RPN.hs

[1 of 2] Compiling Main ( RPN.hs, interpreted )

RPN.hs:5:16: error: [GHC-88464]

• Found hole: _what :: RPNExpression

Or perhaps ‘_what’ is mis-spelled, or not in scope

• In an equation for ‘toSymbols’: toSymbols [] = _what

• Relevant bindings include

Valid hole fits include

[] :: forall a. [a]

with [] @RPNSymbol

(bound at <wired into compiler>)

|

5 | toSymbols [] = _what

| ^^^^^

Failed, no modules loaded.

Oh, it seems that our ghci does not like the name _what it says it's a hole. Holes are names starting with a _ and not bound to any value. When Haskell compiler gets an "unknown variable" error on a hole, it just tells us "oh I found a place where I think you wanted to put something, but did not know what, its type is the type. Oh and by the way, I found some values that could fit this hole...". Here, we see that _what is of type RPNExpression, and it found two values that could fit: [] (the empty list, of course), and mempty (a special object from the Monoid typeclass, which, for lists, is the empty list).

Thanks ghc, now that I think of it, if we have no String to parse, there should be no RPNSymbol, so an empty list should be the right value of toSymbols [] !

toSymbols [] = []

Nice, we can parse empty Strings ! Try it:

λ> :l RPN.hs

λ> readRPNExpression ""

[]

Now let's think about the other cases:

toSymbols (c:[]) = _1

toSymbols (a:(b:[])) = _2

toSymbols (a:(b:(c:[]))) = _3

For the first case, we will want to transform the string a to a symbol:

toSymbols (c:[]) = readRPNSymbol c

Oh, but since it's a list, we should build it in a list. We could just wrap our expression in [ ], but let me write it in a way that will show a pattern later:

toSymbols (c:[]) = readRPNSymbol c : []

It is ok to write it, (:) chains a head to a tail, here, the tail is just the empty list. Now let's think about the second and third case. Well it should be something like this:

toSymbols (b:(c:[])) = readRPNSymbol b : (readRPNSymbol c : [])

toSymbols (a:(b:(c:[]))) = readRPNSymbol a : (readRPNSymbol b : (readRPNSymbol c : []))

Hm... wait, there seem to be a repeating pattern here... let me re-arrange a bit:

toSymbols (c:[]) = readRPNSymbol c : []

toSymbols (b:(c:[])) = readRPNSymbol b : (readRPNSymbol c : [])

toSymbols (a:(b:(c:[]))) = readRPNSymbol a : (readRPNSymbol b : (readRPNSymbol c : []))

You see how the third case contains the second case ? The input of case _3 is c followed by the same input as case _2. And the output of case _3 is really just readRPNSymbol c followed by the output of case _2 ! We could rewrite case _3 based on case _2. Remember, the = symbol in Haskell truly means "equivalent", so if you see an occurrence of readRPNSymbol b : (readRPNSymbol c : []), it is strictly equivalent as toSymbols (b:(c:[])).

toSymbols (a:(b:(c:[]))) = readRPNSymbol a : toSymbols (b:(c:[]))

But wait, the same applies to case _2 and case _1 :

toSymbols (b:(c:[])) = readRPNSymbol b : toSymbols (c:[])

What about case _1 ? Well, remember the base case ? It is equivalent to the empty list, and what is readRPNSymbol c attached to ? The empty list. So we can rewrite it also:

toSymbols (c:[]) = readRPNSymbol c : toSymbols []

Generalize a pattern

We see that everytime we encounter a list of the shape x:xs (a x followed by the rest of the xs), we transform the x (readRPNSymbol x), and chain the transformed value to the transformation of the rest (toSymbols xs). This means instead of enumerating all kinds of lists, we could just write:

-- don't forget the base case

toSymbols [] = []

toSymbols (x:xs) = readRPNSymbol x : toSymbols xs

Try it, you'll see that all cases we unfolded by hand are really just particular cases of this general one.

Wow, we now have a function that parses a whole RPN expression.

λ> readRPNExpression "10 10 +"

[10.0,10.0,+]

Hiding auxiliary functions

If you think about it, the function toSymbols has no other purpose outside of readRPNExpression, but right now it still pollutes our scope. People can access it, and its name may collide with other names in other modules. This is not clean.

We could define it in a let expression in readRPNExpression:

readRPNExpression s =

let toSymbols [] = []

toSymbols (x:xs) = readRPNSymbol x : toSymbols xs

in toSymbols (words s)

But we can do better, with a special syntax for functions. The where clause:

readRPNExpression s = toSymbols (words s)

where

toSymbols [] = []

toSymbols (x:xs) = readRPNSymbol x : toSymbols xs

In a where clause, you can put everything that needs to be in the scope of the function, and keep your main expression clean.

Evaluate a whole expression

Now we need a function that evaluates an expression.

Evaluating a whole expression is pretty simple, we just need to evaluate each symbol from left to right. If we have an expression with 3 symbols: [a,b,c], we need to evaluate a then b then c. Unfolding this will result in:

evalRPNSymbol c (evalRPNSymbol b (evalRPNSymbol a []))

Let's do the same as before: doing a few cases by hand.

-- no symbol means nothing to evaluate, the stack does not change

evalRPNExpression [] s = s

-- evaluate the symbol c

evalRPNExpression (c:[]) s = evalRPNSymbol c s

evalRPNExpression (b:(c:[])) s = evalRPNSymbol c (evalRPNSymbol b s)

evalRPNExpression (a:(b:(c:[]))) s = evalRPNSymbol c (evalRPNSymbol b (evalRPNSymbol a s))

It feels odd, it's like we do have a pattern, but it is backward. In the last example, we had a tree of operations that "combine the processing of the head with the processing of the tail". The pattern had the same order as the input ([a,b,c] gave (:) a ((:) b ((:) c []))). But now, the pattern seem to give eval c (eval b (eval a s))).

Let's think about it for a second. evalRPNExpression expr stack is the result of evaluating an expression expr on a stack stack. This means the function takes a stack that could already be in some state.

If we rewrite the equations, maybe we can see a pattern:

evalRPNExpression [] s1 = ( s1)

evalRPNExpression (c:[]) s2 = evalRPNSymbol c ( s2)

evalRPNExpression (b:(c:[])) s3 = evalRPNSymbol c (evalRPNSymbol b ( s3))

evalRPNExpression (a:(b:(c:[]))) s4 = evalRPNSymbol c (evalRPNSymbol b (evalRPNSymbol a s4))

If you compare the right hand side of lines 2 and 3:

evalRPNSymbol c ( s2)

evalRPNSymbol c (evalRPNSymbol b s3)

You see that the only difference here is s2 and evalRPNSymbol b s3. If we transform s2 with evalRPNSymbol b s3, we get the same value. So the 3rd line is the same as the second line but with s2 = (evalRPNSymbol b s3). And when two things are equivalent, we can change one with the other. So we can rewrite line 3 like this:

evalRPNExpression (b:(c:[])) s3 = evalRPNExpression (c:[]) (evalRPNSymbol b s3)

Once again, we see a recursive pattern emerge. If we transform the pattern (b:(c:[])) with the pattern (sym:syms), we can rewrite our line:

evalRPNExpression (sym:syms) s3 = evalRPNExpression syms (evalRPNSymbol sym s3)

Let's verify this by unfolding evalRPNExpression [a,b,c] [].

evalRPNExpression [a,b,c] []

= evalRPNExpression (a:(b:(c:[]))) []

= evalRPNExpression (b:(c:[])) (evalRPNSymbol a []) -- by recursive case

= evalRPNExpression (c:[]) (evalRPNSymbol b (evalRPNSymbol a [])) -- by recursive case

= evalRPNExpression [] (evalRPNSymbol c (evalRPNSymbol b (evalRPNSymbol a []))) -- by recursive case

= evalRPNSymbol c (evalRPNSymbol b (evalRPNSymbol a [])) -- by terminal case

In the end, the function looks like this:

-- never forget the base case

evalRPNExpression [] s = s

evalRPNExpression (x:xs) s = evalRPNExpression xs (evalRPNSymbol x s)

Iterative form to recursive form translation

evalRPNExpression is interesting. It is really simple to translate it in an imperative language. It would be the same as doing this in JS:

In JS, we use a mutable variable called stack, that we modify at each loop iteration. In Haskell, we carry the modified stack in the function's parameters. This is how you would translate a loop into a recursive function. If you have difficulties thinking about a recursive implementation, try first doing the iterative version, and then translate it.

If the iterative function needs temporary values, like this one:

You can see it as two functions. One having only the parameters, and the other having the parameters AND the temporary values:

-- the function we will expose

power x n = powerAux x n 1

where

-- the helper function, with "r" as the temporary variable

powerAux x 0 r = r

-- look at how n = n - 1 translates to "call powerAux with n-1 as the new n"

-- same with r

powerAux x n r = powerAux x (n - 1) (r * x)

If you need to practice, try translating these functions:

factorial n = factorialAux n 1

where

factorialAux 0 r = r

factorialAux n r = factorialAux (n - 1) (r * n)

fibonacci n = fibonacciAux n 0 1

where

fibonacciAux 0 a b = a

fibonacciAux n a b = let tmp = a in fibonacciAux (n - 1) b (b + tmp)

Printing an expression

We can already show a RPNExpression because it is a list, and we can show a list of Showable things. But this is not pretty. The textual representation of an expression is not [sym1,sym2,...], it is sym1 sym2.... So we need to define a pretty printing function that does exactly this.

Printing an expression is as simple as parsing it: You take a list of symbols, and print them one by one, separated by spaces. For the parsing part, we used the function words to split a String by spaces, for this one we will use unwords, which ... does the opposite: join String with spaces between them. So we should have the same function shape as for parsing, reversed:

-- because RPNExpression is not a type but a type alias, we cannot implement a type class for it

-- we then need our own function

showRPNExpression expr = unwords (toStrings expr)

where

toStrings expr = _impl

Try doing it yourself now ;)

toStrings [] = []

toStrings (x:xs) = show x : toStrings xs

In the end

Now that we have all these little functions, we can combine them to create an evaluator that takes an exprssion as a String and gives the result of the expression... or crash if it encounters any error.

evalRPN s = evalRPNExpression (readRPNExpression s) []

No REPL, a lot of repetitions, no proper error handling, but still, it works pretty well! Good job!

RPN calculator level 2

In this level, we will refactor the code to make it more robust by using generic functions and error handling.

Factoring behavior using higher order functions

Ah, another term that no one understands! Thank you mathematicians!..

Higher order functions are functions that take functions as parameters, or give functions as return value.

So why "higher order"? Because if we think about functions as objects that transform values, a function that transforms another function is 1 degree higher in the "power ranking" of functions, because it not only modifies data but also code.

To see why they are useful, let's look at two functions: readRPNExpression and showRPNExpression:

readRPNExpression s = toSymbols (words s)

where

toSymbols [] = []

toSymbols (x:xs) = readRPNSymbol x : toSymbols xs

showRPNExpression expr = unwords (toStrings expr)

where

toStrings [] = []

toStrings (x:xs) = show x : toStrings xs

If you look closely at toSymbols and toStrings, they look almost the same. The only two differences are: their name, and the function they apply to each element.

toSymbolsappliesreadRPNSymboltoStringsappliesshow

But they share a common behavior: they apply a function to all elements of a list. What if we implemented a function that does the same thing, but the applied function is given as a parameter? We could use it like this:

-- v the function to apply v the list

toSymbols strings = applyToAll readRPNSymbol strings

toStrings symbols = applyToAll show symbols

First, we need to know its type. It takes a function, and a list, and returns a list:

First, try to know the type of applyToAll in the context of toSymbols. toSymbols has type [String] -> RPNExpression, because it takes the result of words (so, a String split by spaces), and returns the list of parsed RPNSymbols (aka, a RPNExpression).

So knowing this:

toSymbols strings = applyToAll readRPNSymbol strings

The type of applyToAll MUST be:

-- type of readRPNSymbol -> type of strings -> result type of toSymbols

Doing the same process, we can know the type of applyToAll in the context of toStrings:

-- type of show -> type of symbols -> result type of toStrings

We have a nice pattern here, we take a function, a list, and get back a list. The types also follow the same pattern. The function takes elements of the same type as in the input list, and outputs elements of the same type as in the output list. How could we write a type that does the same, but "for all types a and b"?

Now that we have its type, we can implement it. It should not be too difficult. It's the same shape as toSymbols and toStrings, but the function to apply is given as parameter:

applyToAll func [] = _1

applyToAll func (x:xs) = _2

applyToAll func [] = []

applyToAll func (x:xs) = func x : applyToAll func xs

Nice, you implemented your first useful higher order function! This function is so useful that Haskell has it already... yeah we reinvented the wheel, but it's not fun if I give you all the answers at once!

In Haskell, this function is called map, and it does exactly the same thing as our applyToAll, and even has the same type! It is so useful that even JavaScript and Python have it.

The implementation of toSymbols and toStrings are so simple now, that we don't even need to give them a name. We can directly write their implementation in place:

readRPNExpression s = map readRPNSymbol (words s)

showRPNExpression expr = unwords (map show expr)

Folding

evalRPNExpression also has a generic implementation. Its name is foldl. You may already know it, because it exists in JavaScript under another name: reduce. Here is the type of foldl:

It is called fold because it takes a sequence of objects and computes a single value by combining them together from first to last (from left to right, hence foldl).

foldl f z [a,b,c] == f (f (f z a) b) c

Does this pattern seem familiar to you? It should. It is very similar to:

evalRPNSymbol c (evalRPNSymbol b (evalRPNSymbol a []))

If evalRPNSymbol had its parameter inverted, we could write it as:

evalRPNSymbolFliped (evalRPNSymbolFliped (evalRPNSymbolFliped [] a) b) c

Replacing evalRPNSymbolFliped with f, and [] with z, we get back f (f (f z a) b) c.

So we could write evalRPNExpression as:

evalRPNExpression expr s = foldl evalRPNSymbolFliped s expr

How to get evalRPNSymbolFliped? Simply wrap evalRPNSymbol in a lambda.

foldl (\a b -> evalRPNSymbol b a) s expr

Could you implement foldl yourself ? You can use our first evalRPNExpression and abstract out the accumulating function.

foldl f x [] = x

foldl f x (y:ys) = foldl f (f x y) ys

Functions as return value

The truth about Pyramids functions

Remember here when I talked about functions with multiple parameters? Well I lied. There is no such thing as a function with multiple parameters. Really, there is only one unique function type, and it is the arrow type (->). A function is a -> b and that's it.

So how does Haskell allow functions like map? After all, map takes 2 parameters... Wrong! Let me show you.

Do you see it now? Yes, the -> type is called right-associative. This means that this function: f :: a -> b -> c -> d is the same as f :: a -> (b -> (c -> d)). If we were to translate this in english, we would say something like "f is a function that takes an object of type a and return a function that takes an object of type b and return a function that takes an object of type c and returns an object of type d". This will explain a lot of weird things about the syntax.

When you "call" a "multi-param" function, you actualy "call" the returned functions 1 by 1. When you do:

λ> add a b = a+b

λ> add 1 2

3

It is doing this:

λ> add = \a -> (\b -> a+b)

λ> (add 1) 2

3

It looks a lot like lambda calculus now, doesn't it? Where lambda abstractions always have 1 binding name and could "output" other lambda abstractions. Here we clearly see it is the same, the first function "outputs" a second function.

Do you see the parenthesis around (add 1) in the second expression? Because of how functions work, you don't "apply" a function to "multiple parameters". You apply a function to 1 parameter, and if the result is another function, you can apply this new function to another parameter. And you can stop anytime. For example, let's create a function that increment a number:

λ> inc = add 1

λ> inc 2

3

It worked! We can bind the resulting function to a name, and use it like any other function. This is called partial application.

Back to map. The thing with this way of thinking is that we now see map as "A function that takes a (a -> b), and transforms it to a function ([a] -> [b])". We can apply map partialy to get a new function:

λ> successors = map inc

λ> :t successors

λ> successors [1,2,3]

[2,3,4]

You can even partialy apply operators (yes, since they are functions too):

λ> inc = (+1) -- here we apply partialy 1 to the operator +

λ> inc 2

3

So why is it useful? Before I answer this, let me show you one operator:

f . g = \a -> f (g a)

This is called the composition operator. If you give it two functions, it create a function that applies the first function on the result of the second. It's like a "then" operator. If you want to apply inc "then" show, you can write show . inc.

λ> incThenShow = show . inc

λ> :t incThenShow

λ> incThenShow 0

"1"

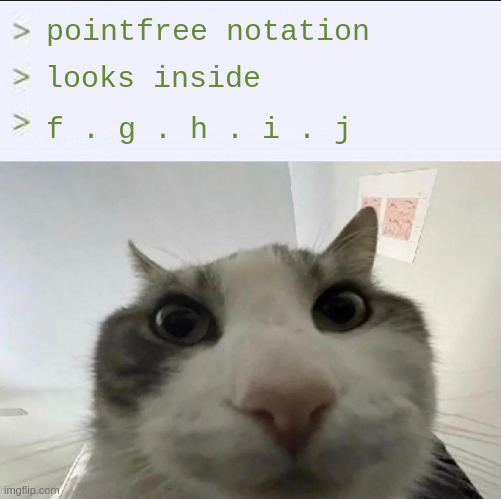

Did you see that functions defined using function combinators have no explicit parameter? This style of defining functions is called pointfree notation. Yes, you write a lot of points because of the composition operator, but points here are another name for arguments. Don't ask me why!

Back to our RPN calculator. These 2 functions below are kind of the same as f (g x). If you partialy apply map, remember, you get a function from lists to lists. So map readRPNSymbol is a function from [String] to [RPNSymbol]. And words is a function from String to [String]. The output type of words is the input type of map readRPNSymbol. The same applies to showRPNExpression.

readRPNExpression s = map readRPNSymbol (words s)

showRPNExpression s = unwords (map show s)

Could you define these functions in pointfree notation?

readRPNExpression = map readRPNSymbol . words

showRPNExpression = unwords . map show

One last for the road. There is a function which would seem useless, but is actualy pretty common thing in functional languages. The flip function. It is defined like this:

flip f a b = f b a

It simply reverses the order of parameters. If we look at its type we clearly see it:

If we reveal unnecessary parenthesis, we see it even better:

It takes a function that takes an object of type a then one of type b, and transforms it to a function that takes an object ot type b and then one of type a. Remember evalRPNExpression? Could you get rid of the lambda by using flip?

evalRPNExpression expr s = foldl (\a b -> evalRPNSymbol b a) s expr

evalRPNExpression expr s = foldl (flip evalRPNSymbol) s expr

Bonus point if you manage to use flip again to make the function pointfree:

evalRPNExpression = flip (foldl (flip evalRPNSymbol))

As you can see with evalRPNExpression, this notation is not good for all functions, so don't do this for everything please. If you want to play with pointfree notation, there is a website called https://pointfree.io/ that converts any Haskell expression for you.

Now let's talk about evalRPNSymbol:

evalRPNSymbol symbol stack = case symbol of

Number n -> n : stack -- numbers are just pushed on the stack

Add -> case stack of (y:x:stack) -> (x + y) : stack

Sub -> case stack of (y:x:stack) -> (x - y) : stack

Mul -> case stack of (y:x:stack) -> (x * y) : stack

Div -> case stack of (y:x:stack) -> (x / y) : stack

Dup -> case stack of (x:stack) -> x : x : stack

Drop -> case stack of (x:stack) -> stack

Could we factorize our binary operations? It seems like the only thing changing is what operation to perform on x and y. Define a function in a where clause that takes a binary operation and results in the same expression, and use this helping function instead of the long case stack of....

evalRPNSymbol symbol stack = case symbol of

Number n -> n : stack -- numbers are just pushed on the stack

Add -> bin (+)

Sub -> bin (-)

Mul -> bin (*)

Div -> bin (/)

Dup -> case stack of (x:stack) -> x : x : stack

Drop -> case stack of (x:stack) -> stack

where

bin op = case stack of (y:x:stack) -> op x y : stack

It is as easy as this.

Handle parsing errors with Maybe

For now, our functions are partial. This means they are not defined for all inputs, and of course, if we give them such input, they will crash. We don't want this anymore. Our program needs to be robust and recover after errors!

For this, we will introduce a new type:

data Maybe a

= Nothing

| Just a

Oops, we did not see this syntax yet, did we? Everything is in place, we have data, we have 2 constructors, but... there is this little a in front of the type's name. This even feels like Maybe is a function? So what is a here? A parameter?

To answer that we will first talk about type of types. (No, I did not stutter).

Type of types

When I talked about parametric polymorphism, and about lists specificaly, I said that we can have "lists of elements of a any type". In mathematics, when we talk about some function, we often say that is "f of x". This means "function f applied to the object x". In Haskell, this "of" is the same for lists. When I say "list of

Int", it is the same as saying "apply the function list to the object Int". And this special "list" function, when applied to the type Int, gives back another type: [Int] (list of Int).

If you don't trust me, why don't you check with :i []:

λ> :i []

type List :: * -> *

data List a = [] | a : [a]

-- Defined in ‘ghc-prim-0.10.0:GHC.Types’

-- Defined in ‘ghc-prim-0.10.0:GHC.Classes’

Look at the two first lines:

type List :: * -> *

data List a = [] | a : [a]

The first line tells us the signature of the type List. It is almost the same as the signature of a function, right? But we never encountered the "type" *. This is because it is the "type of all types". A list really is a function from type to type. And it makes sense, because a list cannot be "just a list", it needs to be a "list of some type".

The second line shows the definition of the type. It starts with data, like we did when creating the type RPNSymbol, but the type name is not directly followed by the = symbol. It has a type parameter. Let's try another one. The tuple type. (a, b). In fact, its name is (,) (without the type parameters).

λ> :i (,)

type (,) :: * -> * -> *

data (,) a b = (,) a b

-- Defined in ‘ghc-prim-0.10.0:GHC.Tuple.Prim’

data (,) a b = (,) a b So (,) is the true tuple type and it takes 2 type parameters. This makes sense, we can have tuples of Int and String, or Bool and Char... any 2 types. And fait enough, because it takes 2 type parameters, its "type" is * -> * -> *.

In Haskell, we differentiate types and type of types. The type of types is called kind.

Back to our Maybe type. This type follows the same rules:

λ> :i Maybe

type Maybe :: * -> *

data Maybe a = Nothing | Just a

-- Defined in ‘GHC.Maybe’

Before Maybe, all other type functions we used were special, because they had special syntax. You don't define tuples like this: (,) Int String, but like this: (Int, String). The same goes for lists: [Int] instead of [] Int, and functions: Int -> String instead of (->) Int String. Though the function syntax is easier to understand if you think about it like it's an infix operator.

But now, all other types we will encounter will have proper function-like syntax. So if you want a "Maybe of Int", you will write Maybe Int. Exactly like a function application.

Using Maybe

As you saw earlier, Maybe has two variants. Just, and Nothing. This type represents the possibility that a value does not exist. To see Maybe in action, we will create a new readRPNSymbol function that, instead of simply return a RPNSymbol, returns a Maybe RPNSymbol.

readRPNSymbol string = case string of

"+" -> Add

"-" -> Sub

"*" -> Mul

"/" -> Div

"dup" -> Dup

"drop" -> Drop

s -> Number (read s)

For now we will just copy the function and rename the copy, to avoid annoying type errors while we code:

readRPNSymbolSafe string = case string of

"+" -> Add

"-" -> Sub

"*" -> Mul

"/" -> Div

"dup" -> Dup

"drop" -> Drop

s -> Number (read s)

You see that it does not really work for now. The result expressions don't have the right type, we still send RPNSymbol. When we see a simple RPNSymbol that cannot fail, we can just wrap them with the Just variant.

readRPNSymbolSafe string = case string of

"+" -> Just Add

"-" -> Just Sub

"*" -> Just Mul

"/" -> Just Div

"dup" -> Just Dup

"drop" -> Just Drop

s -> Just (Number (read s))

Ok now let's try it:

λ> readRPNSymbolSafe "+"

Just +

λ> readRPNSymbolSafe "10"

Just 10.0

λ> readRPNSymbolSafe "blerp"

Just *** Exception: Prelude.read: no parse

Oh no... It did not work at all, so Maybe is just useless? No, it's just that we did not handle error case at all, we just changed the type of the result and wrapped everything in Just. So if read crashes, well, wrapping it in Just will not solve anything.

We need to use a function in the standard library, but not exposed by default. So we need to import a module in our own module. At the beginning of your module, write this:

This will import objects from the module Text.Read. This module contains this function:

You see, this function is like read, but instead of returning a simple object of type a (or crash trying), it returns an object of type Maybe a, and instead of crashing it will return Nothing. Try it:

λ>

λ> readMaybe "blerp" :: Maybe Int

Nothing

λ> readMaybe "10" :: Maybe Int

Just 10

λ> readMaybe "10" :: Maybe Bool

Nothing

No more runtime error! We can of course check if the parsing failed or not:

isJust mx = case mx of

Just x -> True

Nothing -> False

λ> isJust (readMaybe "blerp" :: Maybe Int)

False

λ> isJust (readMaybe "10" :: Maybe Int)

True

λ> isJust (readMaybe "10" :: Maybe Bool)

False

Now we need to modify readRPNSymbolSafe to use readMaybe. We must first try to read a number, and then wrap it in a Number variant if the parsing succeeded. And if not, just forward the Nothing.

readRPNSymbolSafe string = case string of

"+" -> Just Add

"-" -> Just Sub

"*" -> Just Mul

"/" -> Just Div

"dup" -> Just Dup

"drop" -> Just Drop

s -> case readMaybe s of

Just n -> Just (Number n)

Nothing -> Nothing

Now it should work:

λ> readRPNSymbolSafe "blerp"

Nothing

Yay! No more error! We can now replace readRPNSymbol with the definition of readRPNSymbolSafe. Just replace the old implementation with the new and test it:

λ> :l RPN.hs

[1 of 2] Compiling Main ( RPN.hs, interpreted ) [Source file changed]

RPN.hs:31:27: error: [GHC-83865]

• Couldn't match type ‘Maybe RPNSymbol’ with ‘RPNSymbol’

Expected: String -> RPNSymbol

Actual: String -> Maybe RPNSymbol

• In the first argument of ‘map’, namely ‘readRPNSymbol’

In the expression: map readRPNSymbol (words s)

In an equation for ‘readRPNExpression’:

readRPNExpression s = map readRPNSymbol (words s)

|

31 | readRPNExpression s = map readRPNSymbol (words s)

| ^^^^^^^^^^^^^

Failed, no modules loaded.

Of course GHC complains about the type change, and it's nice because if it did not we would have had another crash somewhere else! Because we don't have simple values now but values wrapped in Maybe. GHC tells us that it wanted a String -> RPNSymbol function, but got a String -> Maybe RPNSymbol.

We could simply change readRPNExpression's type to String -> [Maybe RPNSymbol], but what would it mean? A list of Maybe RPNSymbol? It does not make a lot of sense. We need to change the function readRPNExpression so that it fails if it reads a bad symbol (of course if a code file has only 1 error the whole compilation will fail).

So the type String -> Maybe [RPNSymbol] should be more appropriate, it encodes what we want: the parsing of an expression may fail. This does not change our problem... we need to change the function's implementation. Back to handmade code, no more higher order:

readRPNExpression = toMaybeSymbols . words str

where

toMaybeSymbols [] = _1

toMaybeSymbols (x:xs) = _2

readRPNExpression = toMaybeSymbols . words

where

toMaybeSymbols [] = Just []

toMaybeSymbols (x:xs) =

case readRPNSymbol x of

Nothing -> Nothing -- parsing the symbol failed, so we fail

Just sym -> case toMaybeSymbols xs of

Nothing -> Nothing -- parsing the rest of the list failed somewhere, so we fail

Just expr -> Just (sym:expr)

If you followed the tutorial to here, you should know what comes next... Could we implement a function that generalize toMaybeSymbols to not only work for readRPNSymbol but any function from a -> Maybe b?

Implement this function (let's call it traverseList).

traverseList f [] = Just []

traverseList f (a:as) =

case f a of

Nothing -> Nothing

Just b -> case traverseList f as of

Nothing -> Nothing

Just bs -> Just (b:bs)

Now we just have to refactor readRPNExpression using traverseList.

readRPNExpression = traverseList readRPNSymbol . words

evalRPN

Because we modified evalRPNExpression, It's the turn of evalRPN to fail. We have to refactor it to handle parsing errors:

evalRPN str = case readRPNExpression str of

Nothing -> Nothing

Just expr -> Just (evalRPNExpression expr [])

Now try evaluating RPN with syntax errors or not:

λ> evalRPN "1 duplicate"

Nothing

λ> evalRPN "1 dup +"

Just [2.0]

This concludes the second part, we saw how to factorize using higher order functions, and handle errors gracefuly. We are not done factorizing but for that we will need to talk about Functor, Applicative, and Monad in the next and final part. We will also (finaly!) talk about inputs/outputs and design a REPL.

RPN calculator level 3

Telling exactly what happened

For now, we have only one way to tell there was an error. This is the Nothing variant of the Maybe type. We may want to carry data about what was the error. For example, if there was a parsing error, we would like to know which symbol is the culprit.

We will create a type Result that can replace Maybe, but with an error variant carrying an error description as a String instead of just Nothing.

data Result a

= Error String

| Result a

For convenience, we would like to show values of this type, so we will implement Show for it. You can implement it yourself or derive the implementation:

data Result a

= Error String

| Result a

Deriving is kind of magic, it implements boring but useful traits automaticaly for our types. We will use it more often now, but keep in mind that some times you may want to implement traits yourself because the implementation matters.

Now let's rewrite the function readRPNSymbol so that it returns a Result and not a Maybe:

readRPNSymbol string = case string of

"+" -> Result Add

"-" -> Result Sub

"*" -> Result Mul

"/" -> Result Div

"dup" -> Result Dup

"drop" -> Result Drop

s -> case readMaybe s of

Just n -> Result (Number n)

Nothing -> Error ("Unknown symbol '" ++ string ++ "'")

You see now we can tell which symbol made the parsing fail! Try this:

λ> readRPNSymbol "10"

Result 10

λ> readRPNSymbol "nope"

Error "Unknown symbol 'nope'"

The type Result is a very common type, and it is so common that in Haskell, there is a similar type called Either that is used everywhere we need to carry a result from a source, or from another source. Either is defined as:

data Either a b

= Left a

| Right b

It is almost the same as Result, but instead of having a String for the first variant, we have a generic type. This allows people to use Either with their own error type, or even to carry an object that simply have two different shapes. We will now use the type Either String in place of our custom Result type. And yes, I just partialy applied a type function, Either is a function over types that needs 2 types, so Either String is a function that takes 1 type. I can now create a type alias:

type Result = Either String

And this new Result type will behave like the old one except now its variants are Left instead of Error, and Right instead of Result.

Ok, let's modify our functions to use this type instead now.

TODO

- explain how it is very difficult to refactor code when changing the type of our wrapping result

- show how it is sad that we had to modify our generic function

traverseListto work onEitherinstead - introduce

Functorto see that ourevalRPNfunction can be generic over theFunctorused - introduce

Applicativeto see that ourreadRPNExpression(more precisely ourtraverseListfunction) can also be generic over the functor - Emphasis on maintainability, because we may want our functor to become more complex one day maybe

Evaluation errors

TODO: from now on we use Either String instead of Maybe

Now that we handled parsing errors, we need to handle evaluation errors. We will use the same type (Maybe), and do the same as before: first refactor basic functions, then refactor bigger functions. Starting with evalRPNSymbol.