What is a programming language ?

I recently watched a video about whether HTML is a programming language or not. When watching it, I was really astonished to see that so many of programmers refuse to accept it. So I dug a little bit, to see that indeed a lot of us think that HTML is NOT a programming language. Look at this Reddit post from 2 years ago, people are really triggered by that claim. Same with comments of this Computerfile video. I agree, Reddit forums or YouTube comments are not the best representation of a population, but at least it shows that there really are some people adamant about the subject.

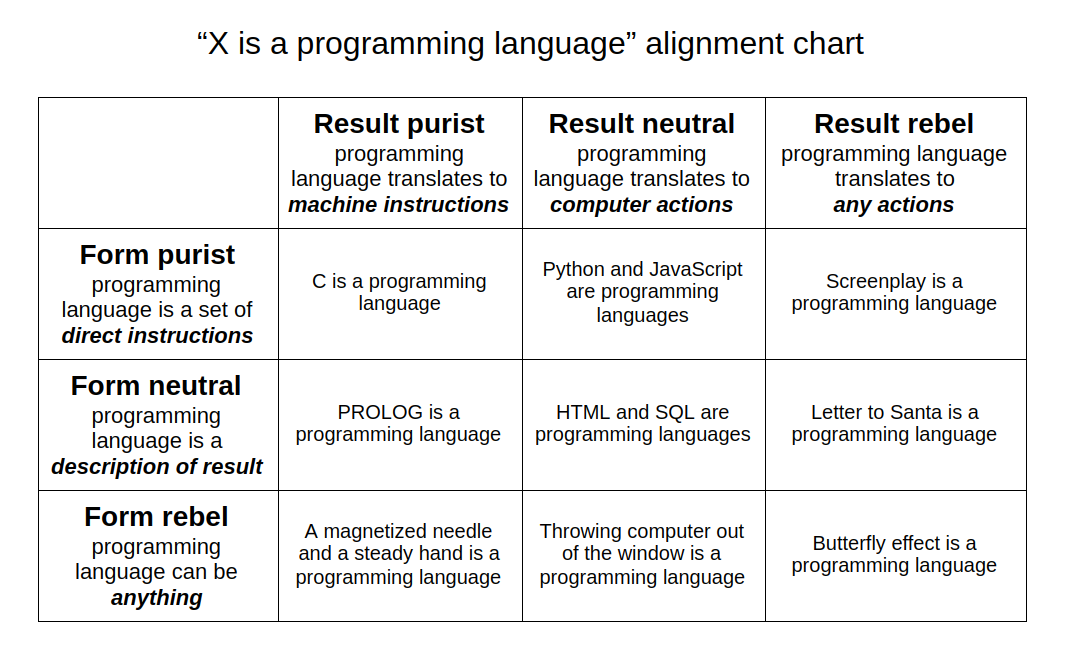

So it made me think a bit more about what were programming languages. Is there a formal definition of it ? If not, can we try to give one ? What is programming ? And ... Does it matter that much ?

I will use a lot of Wikipedia articles. They are not sources by themselves, but are good sum ups of the literature. Follow sources linked by the articles if you want to know more.

Read the definition !

Maybe we already have a good, strict definition of what is a programming language. Let's see what Wikipedia has to say:

A programming language is a system of notation for writing computer programs.

Ok, what is a computer program then ?

A computer program is a sequence or set of instructions in a programming language for a computer to execute.

A mutualy recursive definition, off to a good start ! Ok, the articles do develop a bit more. The programming language article even have a section about different definitions of this term. Specificaly, the section introduces the concepts of markup languages, and Turing Completeness.

Some articles/books I found targetting newcomers also include markup languages as programming languages (or at least , give definitions that include them):

- Principles of programming languages

- Introduction to programming languages

- What is a programming language

So it seems both visions exist.

The most common arguments against HTML as a programming language are answered in the video, but it does not go in depth to see what are the implications of these arguments. I want to talk a bit about 3 of them:

Describing algorithms (aka Imperative programming)

We often see people claiming that a programming language is one in which someone can express an algorithm in the imperative way. That is: expressing a sequence of instructions with conditional branching. This description rejects declarative languages, so yes, it excludes HTML (even though the video refuses to accept it :p). But the real problem of that claim is that it also rejects languages that we (and even people not happy with HTML) consider programming languages. For example, most functional or logic programming languages, like Caml, Haskell, Prolog... as they do not represent programs as sequences of instructions.

Let's see the famous Fibonacci sequence. In any imperative language, let's say C, you would write it like this:

int

We are saying what the computer must do. It must allocate space for some numbers (e.g. a, b, n, tmp), do some maths (e.g. +), move values in the allocated cells (e.g. a = b), loop while some condition is met...

in Haskell, it's totaly different:

fibonacci n

| n < 2 = n

| otherwise = fibonacci (n - 1) + fibonacci (n - 2)

We do not see any performed action, just "something equals something else". We tell the compiler what things are, and not what they do. The value of fibonacci n is n if n < 2, and a more complicated expression otherwise. We can't see loops, because loops would need mutable state (cells you can store values in) and a notion of sequence of instruction. Here we have a pure declarative expression. And no, strictly speaking, the function is not calling functions, or returning values in this scenario, we must read it as fibonacci of n is (...). Let's see another example:

a = 1 + a

This is a totaly valid expression in Haskell, we have a name, a, of type Int, which is equal to ... 1 + itself. Trying to evaluate a will result in an infinite loop, but we never ever "called" a function there.

So what now ? We can still exclude these languages from the definition, but modern versions of almost all imperative programming languages are adding declarative languages features, meaning this paradigm is seen as "programming" for a good part of the community at least.

Of course, you could argue that under the hood, there is a compiler that would transform a declarative program in an imperative one (after all, our CPUs are imperative). But the subject is not about "what the program will do under the hood", it is about "what you are writing". Even a simple <p>Hello World</p> will in the end tell the HTML rendering engine to put some text on the page, so it will trigger IO and syscalls as well.

Turing Completeness

Strictly speaking, a language is Turing complete if it can be used to simulate a Turing machine. A Turing machine is an abstract machine that can compute all computable things. This machine cannot really exist since it requires an infinite memory, and run for an arbitrary (maybe infinite) long time. But let's say one such machine could exist, then simulating a Turing machine would require your language to effectively handle potentialy infinite memory, and run for an infinite time.

Ok, so maybe not all programming languages are imperative. But surely they are all Turing Complete, right ? Well, first, no. In fact, very few langages actualy are turing complete if we use the formal definition.

- Strictly speaking, C is not turing complete as its specification needs a maximum size for memory addresses.

- In early days of FORTRAN (before Fortran 90), people could not even do dynamic allocation, every program needed to tell the compiler "i'm going to use at maximum X bytes of memory". This prevented even theoricaly Fortran to be Turing Complete. But it was the most used language back then, and allowed people to write the basis of what your machine is running on now.

- eBPF programs are staticaly validated, so some normaly valid programs will be rejected.

- All total functional programming languages are by definition turing incomplete, because you cannot write programs that don't terminate or that can end in an "invalid state".

But the real problem with turing completeness, is that it includes totaly random things:

LaTeX (which is considered a typesetting language, not programming language) is Turing complete.

Images are Turing complete (see the Piet programming language). Look at this beautiful brainfuck interpreter (yes it's a very colorful gif):

In fact, all esoteric languages are Turing Complete, and their purpose is to show that Turing Completeness is not a useful discriminator. Leave Turing alone, please.

The last question... Or is it ?

It leaves us with the third argument. "If HTML is a programming languages, then a Word document is a program ?". This one is tough to answer. After all, even if we did not write the document's content ourselves, these two file types describe roughly the same thing: "what text/picture/drawing do I want to display, and where ?".

You know what ? Maybe a Word document IS a program after all ? To find out, let me ask another question:

What is programming ?

Programming, is the composition of sequences of instructions, called programs, that computers can follow to perform tasks.

I believe this is where everyone stop reading the article and is happy that it only talks about sequences of instructions. Or maybe it is because the first section is named History ?

Wait, you don't need a computer to program ?

We don't need to read more than the first section's introduction to learn about:

- Music sequencers

- Automata

- Jacquard Loom (a weaving device) ???

What do all these things have to do with programming ?!

Well, you see, "programming" is really broad, and people programmed things way before computers were born. Since we have automation, people invented automata that behave differently given some input. And writing the good input to get the result you want is called programming.

Do you even need to know you're programming to program ?

In 2002, Richard Stallman talked at the International Lisp Conference. He gave an anecdote about how secretaries extended Emacs without even knowing "programming":

It was Bernie Greenberg, who discovered that it was. He wrote a version of Emacs in Multics MacLisp, and he wrote his commands in MacLisp in a straightforward fashion. The editor itself was written entirely in Lisp. Multics Emacs proved to be a great success—programming new editing commands was so convenient that even the secretaries in his office started learning how to use it. They used a manual someone had written which showed how to extend Emacs, but didn't say it was a programming. So the secretaries, who believed they couldn't do programming, weren't scared off. They read the manual, discovered they could do useful things and they learned to program.

These people, who thought they could not "program", did something most of us would never dare doing: they programmed useful things in Lisp !

The fun part is that you could see what they did as "just configuring their tool", so it would be called "infrastructure as code", or "configuration as code". Oh my, did we just reinvent (again) the wheel ?

In the end, it's all data

In the end, code is data, as much as pixel colors are. Before using electricity or magnetism, we stored programs in punched cards, exactly as we did before for music and images. And the only difference between them was "what these holes in the card mean".

Data is nothing without someone to give it a meaning. And I say someone because we are the ones giving meaning to things, not the computer. We also built computers using our own logic system. Since we are the ones saying what are things, and we like double meanings, of course bits and bytes can also have double meaning !

Disguised programs

Because of the polysemic nature of language, you can hide a program in a chunk of normaly "inert" data. Like an image, or simple ASCII text. I'll show some example of that.

PDF and ZIP

There is a tool called truepolyglot that allows you to create PDF files that are also ZIP files (and vice versa). Ok, these file formats do not describe programs... Or do they ? You can run DOOM in a PDF file, so imagine your totaly legit ZIP file suddenly running DOOM just because you renamed it PDF. Weird, but possible, just because you decided to look at the data in a different angle.

More examples

Look at this 100% printable ASCII text:

‘ PYj0X40HP [ j0X0Y50AO0YO0Y ‘0

Aa0Ya0Ab0Yi0Aj0Yj0Ak0Ym0YnrII0Y70A80Y80A90Y

=0Y >0 YGQZOyI &t < j0X40P [2 YIC ? ,42 AJ@$ <? ’20 ’

wBIj0X40P [2 YJC2AK@ ? ,6$?0 ’ wBJBBAAAuAa5he4 ‘i/

DZ2Fu4XR5gA7f ‘; u ?4} V8Mo5XU5Xg / Sx5XR7f ‘5

gO4DV7f ‘; u ?: @e : KC4XV7f ‘; u ?: @e3LU4XV7f ‘; u ?4 dX

: CA8Mo2 ~ L7@H6fx :? J5_n1 |r5 ‘ g1 | a5dm7fb3EH ;

jL7AO &

There is no extended ASCII, no UTF-8 multi-byte codepoint... not even a single control byte ! Good old plain text.

Well, this exact sequence of printable ASCII bytes is also, in the language of x86 CPUs, a shellcode.

Before 2008, we could also upload GIF files that were in fact JAR (An attack called GIFAR) in order to upload and execute Java code in websites that allowed you to upload images.

In fact, many arbitrary code execution attacks involve polyglot data at some point. The malicious code is hidden in a file, and it exploits vulnerabilities of the softwares that normaly correctly read the files to inject code and execute it.

So what, anything that is data with meaning is a program ?

The answer is more like "It's up to you!". Because the question is not interesting, it does not help understanding differences between things. A program is just some data that tells the computer what to do. But the "what to do" is decided by us. If we decide it's meaningless garbage, then the computer will do nothing with it.

What really matters

Be precise when you talk about things, use terms with clear definitions. We can reason about paradigms:

- procedural

- imperative

- functional

- declarative

- object oriented...

We can reason about usage:

- query data

- detect regular expression patterns

- do a lot of maths

- program a microcontroller

- describe an image using curves

- configure your http server...

All of these can be seen as programming, when doing them we express things in a language that is understandable by the computer (or more precisely: that a program can translate to the computer).

So stop being an elitist.

The only reason someone would try to differentiate programming from non-programming languages or activities is to separate people who program and people who "just write data". Because "programming" is considered a valuable job, while just feeding data to the machine is not (after all, we have GPT and other LLMs now, don't we ?).

But as we saw, processes and data are dual, you can represent data by "how to produce it" (e.g. procedural generation), and you can represent a process by what input (data) gives what output (still data).

In the end, a letter to santa really is a program.